Virtual Paper Review – SegementAnything3: Segment Video with Text

February 11 @ 6:00 pm – 7:30 pm

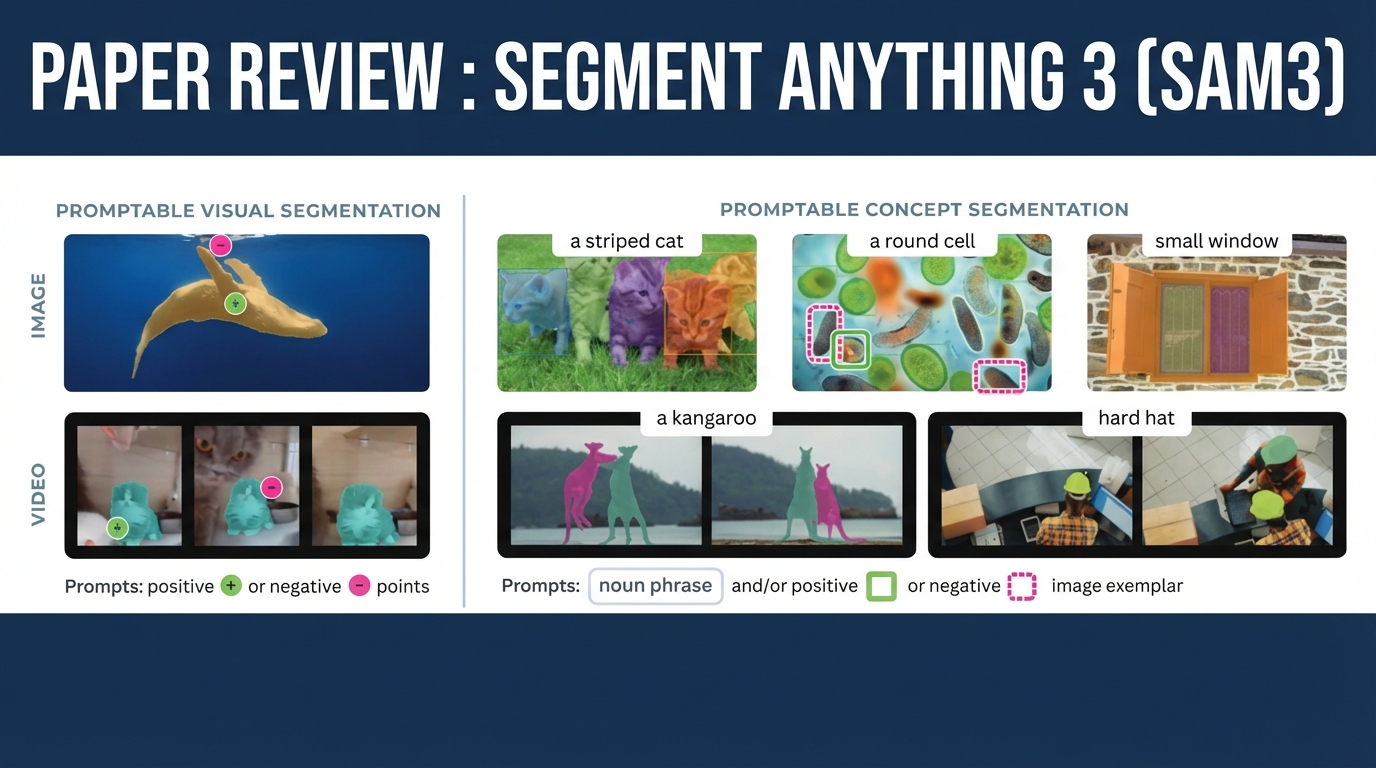

Join us virtually Wednesday Feb 11th at 6 pm CST to continue our monthly Paper Review series! We will be dissecting SAM 3 (Segment Anything Model 3), a unified model that bridges the gap between geometric segmentation (clicks and boxes) and semantic understanding (text and concepts). While previous versions excelled at “segmenting that thing,” SAM 3 introduces Promptable Concept Segmentation (PCS), the ability to find, segment, and track all instances of a specific concept (e.g., “striped cat” or “red apple”) across both images and videos.

Topics we will cover :

- PVS vs. PCS: The evolution from Promptable Visual Segmentation (points/masks) to Promptable Concept Segmentation (noun phrases/exemplars).

- Localization-Recognition Conflict: Why forcing a model to know “where” something is often conflicts with knowing “what” it is, and how open-vocabulary detection has historically struggled with this.

- Data Engine Basics: The role of human-in-the-loop vs. model-in-the-loop pipelines for generating massive-scale segmentation datasets.

- Architecture: How SAM 3 fuses an image-level DETR-based detector with a memory-based video tracker using a shared Perception Encoder (PE) backbone.

- Presence Head: A look at the novel “presence token” that decouples recognition (is the concept in the image?) from localization (where are the pixels?)

- SA-Co dataset (4M unique concepts).

- Video Disambiguation: Strategies for handling temporal ambiguity, including “masklet” suppression and periodic re-prompting during tracking failures.

Links:

- Paper:

https://ai.meta.com/research/publications/segment-anything-model-3-sam-3/ - Code:

https://github.com/facebookresearch/sam3 - Demo:

https://segment-anything.com

Details:

- Date – 02/11/2026

- Time – 6:00 – 7:30 pm

- Location – VIRTUAL

- Google Meet – https://meet.google.com/drf-dydt-mgn